HashMap or sequential scan?

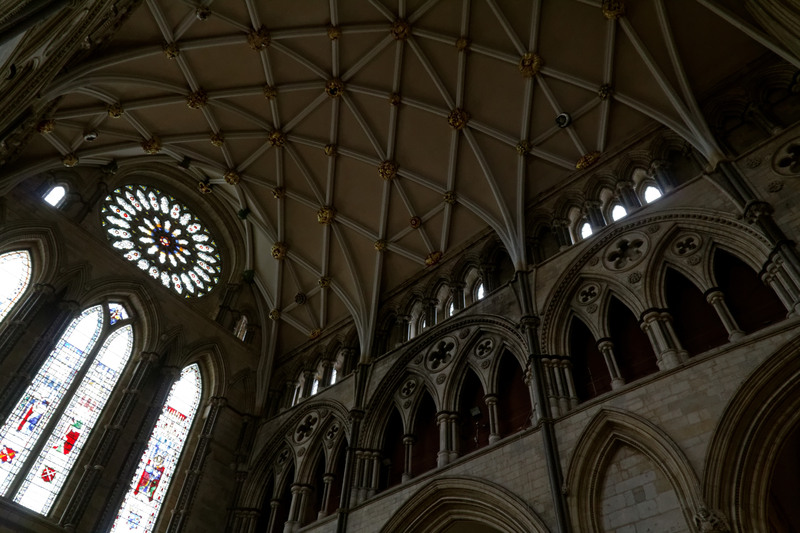

Arches, York Minster

In Duke (an engine for finding near-duplicate database records) there is an interface called Record, which represents a record to be indexed or compared against other records. The interface has the methods you would expect, such as the following:

```java
/**
 * The names of the properties this record has. May be a subset of
 * the properties defined in the configuration if not all properties
 * have values.
 */
public Collection<String> getProperties();

/**
 * All values for the named property. May be empty. May not contain
 * null or empty strings. Never returns null.
 */
public Collection<String> getValues(String prop);

/**
 * Returns a value for the named property. May be null. May not be
 * the empty string. If the property has more than one value there is
 * no way to predict which value is returned.
 */
public String getValue(String prop);
```

The obvious implementation is to store the contents of the record as a Map from String to Collection<String>, which is what Duke versions 0.1 through 1.1 did. It is easy to implement and works well. However, after version 1.1 I started doing more serious work on performance and scaling, and one of the things I looked at was memory usage.
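As a rough sketch of what such a map-based record might look like (this is not Duke's actual code; the class name `MapRecord` and the `addValue` method are made up for illustration):

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

// Hypothetical map-backed record; a sketch, not Duke's real class.
class MapRecord {
    private final Map<String, Collection<String>> data = new HashMap<>();

    // Adds one value for a property, skipping null and empty strings
    // so that getValues() never contains them.
    void addValue(String prop, String value) {
        if (value == null || value.isEmpty()) return;
        data.computeIfAbsent(prop, k -> new ArrayList<>()).add(value);
    }

    Collection<String> getProperties() {
        return data.keySet();
    }

    // Never returns null; an unknown property yields an empty collection.
    Collection<String> getValues(String prop) {
        Collection<String> values = data.get(prop);
        return values == null ? Collections.<String>emptyList() : values;
    }

    // Returns some value for the property, or null if there is none.
    String getValue(String prop) {
        Collection<String> values = data.get(prop);
        return (values == null || values.isEmpty()) ? null : values.iterator().next();
    }
}
```

Note that every record carries a HashMap with its internal table and entry objects, plus one collection per property. That overhead is what the rest of this post is about.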

Using less memory has several advantages: less time is spent on garbage collection, bigger data sets can be processed without having to access the disk (which is extremely slow), and CPU caches have more hits. So in many cases, using less memory automatically translates into higher performance.

But how can we use less memory? Well, arrays are about as compact as you can get in Java, so we can store the record as a single array of alternating property names and values. If we have the record foo=bar, baz=quux, then the string array will contain those four strings, in the same order as in this sentence. It's also dead easy to implement.
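A minimal sketch of the array layout described above, assuming the record is built once from name/value pairs (the class name `CompactRecord` and its constructor are illustrative, not Duke's actual code):

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.List;

// Hypothetical array-backed record; a sketch of the idea only.
class CompactRecord {
    // Layout: [name0, value0, name1, value1, ...]
    private final String[] data;

    CompactRecord(String... namesAndValues) {
        if (namesAndValues.length % 2 != 0)
            throw new IllegalArgumentException("expected name/value pairs");
        this.data = namesAndValues.clone();
    }

    // Collects the distinct property names found at the even positions.
    Collection<String> getProperties() {
        Collection<String> props = new LinkedHashSet<>();
        for (int ix = 0; ix < data.length; ix += 2)
            props.add(data[ix]);
        return props;
    }

    // Sequential scan: gathers every value stored under the given name.
    Collection<String> getValues(String prop) {
        List<String> values = new ArrayList<>();
        for (int ix = 0; ix < data.length; ix += 2)
            if (data[ix].equals(prop))
                values.add(data[ix + 1]);
        return values;
    }

    // Sequential scan: returns the first matching value, or null.
    String getValue(String prop) {
        for (int ix = 0; ix < data.length; ix += 2)
            if (data[ix].equals(prop))
                return data[ix + 1];
        return null;
    }
}
```

With this sketch, `new CompactRecord("foo", "bar", "baz", "quux")` holds exactly the four strings from the example, and a property with several values simply appears several times in the array.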

At this point, all the instincts of computer science-trained developers scream "O(n^2) performance!" Each lookup now has to scan the array, so the time needed to look up all the keys of a record is going to increase with the square of the number of properties. And we do that twice for every record, so clearly this is a real issue.


However, the O() notation is a simplification, stripping away all factors in the true equation describing the performance, to leave only the one which will dominate as n grows very large. In other words, the O() notation is a guide to the behaviour for large values of n, but may be misleading for smaller values of n.
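To make this concrete, here is a deliberately simplified cost model. The constants $a$ (cost of one string comparison in the scan) and $c$ (fixed cost of hashing a key and probing the table) are illustrative assumptions, not measurements from Duke:

$$T_{\text{scan}}(n) = a \cdot n, \qquad T_{\text{hash}}(n) = c$$

$$T_{\text{scan}}(n) < T_{\text{hash}}(n) \iff n < \frac{c}{a}$$

Looking up all $n$ keys multiplies both sides by $n$, giving $a \cdot n^2$ against $c \cdot n$, so the crossover point stays at $n = c/a$. Below that point the "quadratic" scan wins, even though the O() notation says it should lose.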

An excellent example of this is Quicksort, which has O(n * log n) performance on average, as compared to Insertion sort's O(n^2). It's well known that for smaller n, insertion sort is in fact faster, and so many Quicksort implementations switch to Insertion sort when the recursion comes down to small arrays. The exact value for n is not a constant. I've seen figures of both 7 and 20 being cited.
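The switch-over can be sketched as follows. This is one possible way to write such a hybrid, not the code of any particular library; the class name `HybridSort` and the cutoff of 10 are arbitrary choices for illustration:

```java
// Hypothetical hybrid sort: Quicksort that hands small ranges to Insertion sort.
class HybridSort {
    // Assumed cutoff; real implementations tune this value empirically.
    static final int CUTOFF = 10;

    static void sort(int[] a) {
        quicksort(a, 0, a.length - 1);
    }

    private static void quicksort(int[] a, int lo, int hi) {
        // Small ranges: the O(n^2) algorithm is used on purpose.
        if (hi - lo + 1 <= CUTOFF) {
            insertionSort(a, lo, hi);
            return;
        }
        int p = partition(a, lo, hi);
        quicksort(a, lo, p);
        quicksort(a, p + 1, hi);
    }

    // Hoare partition scheme with the middle element as pivot.
    private static int partition(int[] a, int lo, int hi) {
        int pivot = a[lo + (hi - lo) / 2];
        int i = lo - 1;
        int j = hi + 1;
        while (true) {
            do { i++; } while (a[i] < pivot);
            do { j--; } while (a[j] > pivot);
            if (i >= j) return j;
            int tmp = a[i];
            a[i] = a[j];
            a[j] = tmp;
        }
    }

    // Sorts a[lo..hi] in place by shifting larger elements to the right.
    private static void insertionSort(int[] a, int lo, int hi) {
        for (int i = lo + 1; i <= hi; i++) {
            int key = a[i];
            int j = i - 1;
            while (j >= lo && a[j] > key) {
                a[j + 1] = a[j];
                j--;
            }
            a[j + 1] = key;
        }
    }
}
```

The point is the first branch in `quicksort`: below the cutoff, the algorithm with the worse O() bound is chosen deliberately because its constant factors are smaller.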

A typical Duke record will have between 3 and 10 properties, so it could well be that simple lookups will in fact be faster with the primitive implementation. However, this is just theory. The way to settle performance arguments is testing, so let's look at what happens in practice.

Testing it, I find that memory consumption with the compact record implementation is just 50% of that with the old implementation. Runtime performance varies a bit, but is better for most data sets, and slightly worse for a few. Runtime here combines the cost of sequential searching with the benefits of memory compactness, so that's the overall figure.

But what's the use of saving 50% memory if the speed is the same? Basically, that we have doubled the size of the data sets we can process with the in-memory backends to Duke. The in-memory backends are much faster than their disk-based counterparts (for obvious reasons), so this is a big win.

In short, although the compact implementation seems to fly in the face of everything that theory teaches us, it's clearly the better implementation. It's not that the theory is wrong; it's just that you need to apply it carefully.
