TMRA'05 — second day

(Second day of semi-live coverage from the TMRA'05 Topic Maps research workshop.)

Today we again start with Jack Park, this time speaking on "Just for Me: Topic Maps and Ontologies". The talk is about IRIS, a platform for personal knowledge management. It's based on Mozilla, but also has a Topic Maps implementation in it. The basic idea is to make ontologies usable for normal people, most of whom don't really understand ontologies. It also uses OWL, and according to Park "the brass at SRI is starting to wonder whether the ontology couldn't just be in the topic map".

The architecture has three layers: user interface, knowledge base, and information resources. There is a set of harvesters, which collect information from various sources (such as Google) and put it into the knowledge base.

The goal is to reduce cognitive overhead by letting users use their own terms for concepts, relationships, etc. Personal naming does not interfere with interoperability, because the topic map handles the identities and users can then add whatever names they like.
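Here is a minimal sketch of the idea. It assumes topics are identified by subject identifiers (PSIs) and that each user keeps a private table of labels on top of them. The `PersonalView` class, its methods, and the example.org identifier are hypothetical illustrations, not IRIS's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalView:
    """One user's private labels layered over shared subject identifiers (hypothetical)."""

    user: str
    labels: dict = field(default_factory=dict)  # PSI -> this user's own name

    def name(self, psi: str, label: str) -> None:
        self.labels[psi] = label

    def display(self, psi: str) -> str:
        # Fall back to the identifier itself if the user has not named the subject.
        return self.labels.get(psi, psi)

    def to_identifier(self, label: str) -> str:
        # Resolve a personal label back to the shared PSI before exchanging data.
        for psi, own in self.labels.items():
            if own == label:
                return psi
        raise KeyError(label)


psi = "http://example.org/psi/project-x"  # hypothetical PSI
alice, bob = PersonalView("alice"), PersonalView("bob")
alice.name(psi, "my big project")
bob.name(psi, "Project X")
```

Alice and Bob see different names, but anything they exchange is keyed on the same identifier. That is why the personal names do not get in the way.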

He then did a demo of IRIS and something called CALO that was part of it. What he said about it was remarkably reminiscent of the famous Scientific American article about the Semantic Web. CALO seems to be an extended email client which suggests, for each email, what projects it's related to, and asks you other questions about it that would tie the email into your topic map.

IRIS is LGPL-licensed.

Rebuilding Virtual Study Environments Using Topic Maps

Kamila Olsevicova was the next speaker, talking about the problems with existing e-learning environments, and how she thinks Topic Maps can solve these problems. Examples of existing products in this space are WebCT, LearningSpace, and Blackboard. Typical functions of existing systems are educational content delivery, communication, course management, student utilities, and miscellanea like course info handling, searching, etc.

She then showed the WebCT interface as an example. It looked very much like something that's useful for administering courses, but pretty primitive in terms of organizing the actual course contents.

She described the limitations as being a lack of subject-based organization of learning content, difficulties finding particular resources, no connection to other IT systems, and poor presentation on the web, since most material is just normal textbooks reformatted in HTML. This gives a linear style of presentation, etc.

They suggest integrating the contents of different courses, and connecting the study environment with other applications. The idea is of course to use Topic Maps for this. They've done a pilot study to demonstrate the idea, organizing the content for two related courses. They built three kinds of topic maps: one for the information resources, one for the courses, and a domain map for the actual subject area. The pilot was shown in the Omnigator, and they used scope to filter the content. She showed the ontology, but I can't type fast enough to include even highlights of it.
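In Topic Maps, scope qualifies a statement with the contexts in which it is valid. Filtering by scope therefore means hiding statements whose scope does not fit the reader's current context. The sketch below assumes one simple policy: show a statement if every theme in its scope is in the active context, and always show unscoped statements. The talk did not say which policy the pilot used, and the course and file names are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Occurrence:
    topic: str
    resource: str
    scope: frozenset = frozenset()  # themes; empty means valid in every context


def filter_by_scope(occurrences, context):
    """Keep occurrences whose scope themes are all present in the active context."""
    active = frozenset(context)
    return [occ for occ in occurrences if occ.scope <= active]


material = [
    Occurrence("recursion", "slides-a.pdf", frozenset({"course-a"})),
    Occurrence("recursion", "exercises-b.pdf", frozenset({"course-b"})),
    Occurrence("recursion", "glossary.html"),
    Occurrence("recursion", "proofs.pdf", frozenset({"course-a", "advanced"})),
]
```

With the context `{"course-a"}`, this keeps the course A slides and the unscoped glossary. Adding `"advanced"` to the context also brings in the proofs.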

She showed Omnigator screenshots of her topic map. Several people have done this, but for some reason they use one of the older stylesheets instead of the standard one. One person had the toothpaste colours; she used the old orange look. Hmmmmm. No PSIs were visible in the topic map, but it was multilingual. They've included the ACM classification terms in the topic map to describe their courses. Individual lectures are described, together with exercises, resources, etc.

Their conclusion seems to be that the project was successful, and it seems they plan to take the project further. This part was a bit too quick for me to follow. In the question session it emerges that they plan to create a custom interface based on the topic map. Interesting. There's definitely a lot of interest in Topic Maps in the e-learning area.

Collaborative Software Development and Topic Maps

Markus Ueberall (clearly the speaker with the coolest name :-) is covering this subject. He described problems and the state of the art in this area, but most of it went over my head, I'm afraid. This is a research-in-progress report, so they only have 15 minutes, and this might be part of the reason.

Their approach is to have different base ontologies for different phases of software development. They also have a generic process model, which they visualize, to guide users through the phases. So far they only support Java, and they use Eclipse as their platform. They use an existing ontology called ODOL, and they use Protégé as their editor.

He shows their basic ontology in LTM syntax. It seems from the example that they do version control in the topic map. He then showed a diagram of the process model; a key purpose of this seems to be ensuring a shared conceptualization of the problem space among project members. More LTM shows that they include the project members, their roles, their tasks, etc. in the topic map. They also connect Java classes with what seems to be a model of what the application being built is doing.

They want to implement several kinds of visualization, including not only the traditional graph visualization, but also UML diagrams, and semantically annotated source code. This looks like a major project to me, but extremely cool if they can actually pull it off.

A diagram shows some of the pieces they are using. Eclipse is the platform, they use TMAPI to connect to the topic map engine, they use the TMTab plug-in for Protégé, and they want to use Robert Barta's TMIP protocol for communications. TMCL is featured under "process control".

In the question session it emerged that he didn't know of the ATop topic map editor, which is an Eclipse plug-in. Their own Eclipse plug-in will be released as open source, he said, because they want feedback.

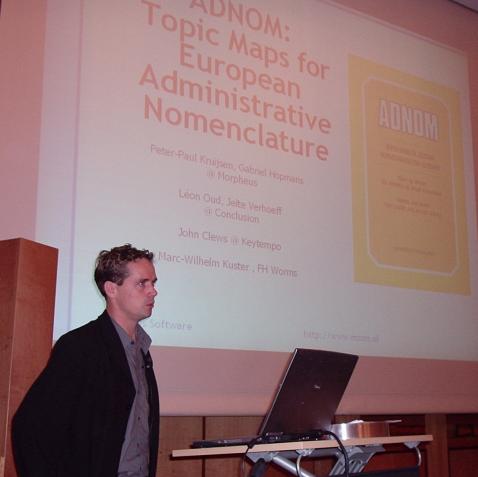

ADNOM

Gabriel Hopmans is next, speaking on an EU project between Morpheus, Conclusion, Keytempo, and FH Worms. It's about access to resources about European administrative nomenclature. The key deliverables are example data and partly the basic infrastructure. This is also a research-in-progress report, and so quite short. (There's a lot of detail on the administrative parts of the project; I'm skipping that. One interesting tidbit is that their secretary is Håvard Hjulstad of Standards Norway, who is registrar for ISO 639.)

Users are government employees all over the EU/EEC. The system must therefore be multilingual, must make it easy to find terms, and they need to avoid copyright issues and suchlike. The idea is to reuse existing terminology sources, where the main areas to be covered are administrative functions (based on COFOG), administrative jurisdictions (based on NUTS), and administrative organs (they will build this part themselves). They want the topic map to "act as a coat rack", so the purpose is to connect different types of information using the terminology topic map.
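The "coat rack" works because of the Topic Maps merging rule. Topics from different sources that share a subject identifier represent the same subject and are merged into one. Below is a minimal sketch of that rule alone. It ignores TMDM's other merge conditions and uses union-find so that merges chain transitively. The topic dictionaries and example.org identifiers are hypothetical, not ADNOM data.

```python
from collections import defaultdict


def merge_by_identifier(topics):
    """Merge topics that share a subject identifier, following chains transitively.

    Each topic is a dict with "ids" (a set of subject identifiers) and
    "names" (a set of names); the result is one such dict per subject.
    """
    parent = list(range(len(topics)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    owner = {}  # subject identifier -> index of the first topic that had it
    for i, topic in enumerate(topics):
        for sid in topic["ids"]:
            if sid in owner:
                parent[find(i)] = find(owner[sid])
            else:
                owner[sid] = i

    merged = defaultdict(lambda: {"ids": set(), "names": set()})
    for i, topic in enumerate(topics):
        group = merged[find(i)]
        group["ids"] |= topic["ids"]
        group["names"] |= topic["names"]
    return list(merged.values())


PSI = "http://example.org/psi/"  # hypothetical identifier base
sources = [
    {"ids": {PSI + "france"}, "names": {"France"}},
    {"ids": {PSI + "france", PSI + "fr"}, "names": {"République française"}},
    {"ids": {PSI + "fr"}, "names": {"Frankreich"}},
    {"ids": {PSI + "uk"}, "names": {"United Kingdom"}},
]
subjects = merge_by_identifier(sources)
```

The three French topics chain together through two different identifiers. They therefore collapse into one subject with three names, while the UK topic stays separate.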

Again we see Omnigator screenshots, this time with the standard stylesheet. They have a thesaurus in there, and it's clearly converted from an existing one, though I couldn't tell which as it flew by. His diagrams show how they intend to connect the different sources where they overlap. One diagram showed the administrative structures of the French and UK governments, with a link stating what the head of state is in each case. One screenshot shows a term with 26 translations in different scopes. (Good thing we reuse scope set collections in the OKS! :-) Danish Eurovoc then flew by in the Omnigator at low altitude.

They want to "use and extend OASIS PSI sets", get more exposure for PSIs in Europe, and finally to get funding for a bigger project to create a PSI registry. A separate slide discussed the possible impacts of ADNOM, and it's clear that they have big plans for pushing Topic Maps in EU government circles. Very cool!

There is an ADNOM mailing list people can subscribe to, by emailing a request to hhj at standard.no.

Chair Thomas Schwotzer cut off questions, as Gabriel spoke a little into the coffee break. Oh well. :)

tolog query algebra

I presented on this. This talk presented no changes to the tolog query language, but focused on the development of a formal query algebra for the language. This provides a formal underpinning for the language, which serves as a proper specification for it, and at the same time serves as the basis for optimizations and type inferencing. This was hard work to develop, and I'm very pleased with myself. :)
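The algebra itself is in the paper. As a rough illustration of why an algebra helps, consider the simplest case. Each predicate in a conjunctive tolog-style query is evaluated to a list of variable bindings, and the comma between clauses becomes a natural join. The sketch below assumes that setup and reuses the Garshol/Ontopia data from the TM/XML talk below. The helper names are hypothetical, and this is not the algebra from the paper.

```python
def bindings(rows, variables):
    """Evaluate a predicate: pair each tuple with the query variables it binds."""
    return [dict(zip(variables, row)) for row in rows]


def natural_join(lhs, rhs):
    """Conjunction of two clauses: keep pairs that agree on their shared variables."""
    joined = []
    for a in lhs:
        for b in rhs:
            if all(a[v] == b[v] for v in a.keys() & b.keys()):
                joined.append({**a, **b})
    return joined


EMPLOYED_BY = [("lmg", "ontopia")]  # (employee, employer)
HOMEPAGE = [("lmg", "http://www.garshol.priv.no"),
            ("ontopia", "http://www.ontopia.net")]

# Roughly: employed-by($PERSON : employee, $COMPANY : employer),
#          homepage($COMPANY, $URL)?
answer = natural_join(bindings(EMPLOYED_BY, ["$PERSON", "$COMPANY"]),
                      bindings(HOMEPAGE, ["$COMPANY", "$URL"]))
```

`answer` holds a single row binding `$PERSON` to `lmg`, `$COMPANY` to `ontopia`, and `$URL` to `http://www.ontopia.net`. A nested-loop join keeps the sketch short. Once queries are expressed as algebra, an optimizer is free to reorder joins or choose cheaper join strategies without changing the result.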

TMDM in T+

Lars Heuer presents work he and Robert Barta did to represent TMDM in the T+ model. This is a crucial piece of the standards work, since without it TMQL and TMCL would not work on TMDM. He walked through the mapping they had done, using a set of very clear diagrams (at least if you know T+). It's too hard to reproduce this here, but I can recommend the slides. Unfortunately, their mapping has the same problems that plagued my mapping (which is in the ISO document registry as N0659). Their mapping is different, but the fact that it still runs into the same problems is troubling. Not sure what to do about that.

TM/XML

This talk was by me again, on a natural XML syntax for Topic Maps that, unlike XTM, is nicely understandable by humans and easily processable with normal XML tools. It's probably best introduced by way of example. The following LTM:

    [lmg : person = "Lars Marius Garshol"]
    {lmg, homepage, "http://www.garshol.priv.no"}

    [ontopia : company = "Ontopia"]
    {ontopia, homepage, "http://www.ontopia.net"}

    employed-by(lmg : employee, ontopia : employer)

turns into the following in TM/XML:

    <topicmap xmlns:iso="http://psi.topicmaps.com/iso13250/"
              xmlns:tm="http://psi.ontopia.net/xml/tm-xml/">
      <person id="lmg">
        <iso:topic-name><tm:value>Lars Marius Garshol</tm:value></iso:topic-name>
        <homepage datatype="http://www.w3.org/2001/XMLSchema#anyURI">http://www.garshol.priv.no</homepage>
        <employed-by topicref="ontopia" role="employee"/>
      </person>
      <company id="ontopia">
        <iso:topic-name><tm:value>Ontopia</tm:value></iso:topic-name>
        <homepage datatype="http://www.w3.org/2001/XMLSchema#anyURI">http://www.ontopia.net</homepage>
        <employed-by topicref="lmg" role="employer"/>
      </company>
    </topicmap>

The full details, plus a RELAX-NG schema, are given in the paper.
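Because TM/XML is ordinary XML, standard tools can read it directly. Below is a minimal Python sketch using `xml.etree.ElementTree`. It handles only the constructs in the example above, under these assumptions:

* the element name of each child of `topicmap` is the topic type;
* `iso:topic-name` carries a name;
* a child with `topicref` is an association in which the containing topic plays `role`;
* any other child is an occurrence.

The function name is hypothetical, and this is not a complete TM/XML reader.

```python
import xml.etree.ElementTree as ET

ISO = "{http://psi.topicmaps.com/iso13250/}"
TM = "{http://psi.ontopia.net/xml/tm-xml/}"


def read_tmxml(text):
    """Extract (types, names, occurrences, associations) from a TM/XML string."""
    root = ET.fromstring(text)
    types, names, occurrences, associations = {}, {}, [], []
    for topic in root:
        tid = topic.get("id")
        types[tid] = topic.tag  # assumed: the element name is the topic type
        for child in topic:
            if child.tag == ISO + "topic-name":
                names[tid] = child.find(TM + "value").text
            elif child.get("topicref") is not None:
                # The containing topic plays `role`; the other player is `topicref`.
                associations.append(
                    (child.tag, tid, child.get("role"), child.get("topicref")))
            else:
                occurrences.append(
                    (tid, child.tag, child.text, child.get("datatype")))
    return types, names, occurrences, associations
```

Given the example document, it returns the types `{'lmg': 'person', 'ontopia': 'company'}` and two association tuples, one from each end of `employed-by`. These presumably describe the same association, so a fuller reader would need to collapse them.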

Then it was lunch, and I came back just in time for the next presentation.

Navigating through archives, libraries, and museums

Salvatore Vasallo presented two Topic Maps projects (he listed three, but will only talk about two, apparently).

The first one is about analyzing user searches to find variants of topic names, so that wrong names can be connected to the right ones. He does model these as actual names, but creates topics for the different kinds of names and connects them with associations. Omnigator and Vizigator screenshots fly by (old stylesheets); my impression was that some choices had been made to get the right presentation in Vizigator, but I could be wrong. There was some maths here, but I couldn't grasp it.
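The talk's own method, and its maths, went past too quickly to capture, so this is a swapped-in technique rather than his. It is a minimal sketch that pairs unrecognised search terms with the closest known name, using string similarity from Python's `difflib`. The function names, the 0.75 cutoff, and the artist names are illustrative assumptions. Each resulting pair could then become an association between a variant-name topic and the topic it refers to.

```python
import difflib


def closest_name(term, canonical_names, cutoff=0.75):
    """Return the known name most similar to `term`, or None if nothing is close."""
    by_lower = {name.lower(): name for name in canonical_names}
    hits = difflib.get_close_matches(term.lower(), list(by_lower), n=1, cutoff=cutoff)
    return by_lower[hits[0]] if hits else None


def variant_links(search_log, canonical_names):
    """Pair each unrecognised search term with the name it most likely meant."""
    known = {name.lower() for name in canonical_names}
    links = []
    for term in search_log:
        if term.lower() in known:
            continue  # exact hits are not variants
        target = closest_name(term, canonical_names)
        if target is not None:
            links.append((term, target))
    return links
```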

This is going too fast. He's on to the second project now, but I must either listen or type. A lot of good stuff on library data is flying past here, but I can't capture it.

Clearly other people were having more luck with this presentation (maybe because they weren't typing :-). Some people want him to do a set of PSIs as a design pattern for representing his mapping from FRBR to Topic Maps, which makes good sense to me.

Here there was a short break, as the next presenters struggled with their laptop.

MARCXTM

The next speaker is Lee Hyun-Sil, from a Korean university. She is presenting a mapping from MARC, a standard for bibliographic data, to Topic Maps. MARC records use the exchange format defined by an ISO standard, ISO 2709:1996. From what she says, it sounds like MARC is a binary format. She's walking through the format, and it seems pretty complicated. Then we get a hex view: it is binary. A MARC21 format in XML has now been developed, and it's more than just a vocabulary; it's a complete framework reproducing MARC in XML. (There's lots more on this in her presentation than I'm capturing.) She shows an example, and it does look like an XML version of the binary thing we saw before.
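The hex view is likely the ISO 2709 record structure that carries MARC records. A record consists of:

* a 24-character leader;
* a directory of 12-character entries, each with a 3-character tag, a 4-digit field length, and a 5-digit start offset;
* the fields themselves, separated by control characters.

Below is a minimal Python sketch of writing and reading that structure. It assumes UTF-8 field content and the usual `4500` entry map, and it ignores MARC-8 encoding and validation. The function names are hypothetical, and this is not the MARCXTM mapping from the talk.

```python
FIELD_END = b"\x1e"   # field terminator
RECORD_END = b"\x1d"  # record terminator
SUBFIELD = b"\x1f"    # subfield delimiter


def build_record(fields):
    """Assemble (tag, field bytes without terminator) pairs into one record."""
    directory, data = b"", b""
    for tag, value in fields:
        chunk = value + FIELD_END
        directory += tag.encode("ascii") + b"%04d%05d" % (len(chunk), len(data))
        data += chunk
    base = 24 + len(directory) + 1  # leader + directory + its terminator
    total = base + len(data) + 1    # + record terminator
    # "nam" at positions 5-7; "a" at position 9 marks Unicode in MARC 21.
    leader = b"%05dnam a22%05d   4500" % (total, base)
    return leader + directory + FIELD_END + data + RECORD_END


def parse_record(record):
    """Split one record into (leader, [(tag, value), ...])."""
    leader = record[:24]
    base = int(leader[12:17])        # base address of data
    directory = record[24:base - 1]  # drop the directory's own terminator
    fields = []
    for i in range(0, len(directory), 12):
        entry = directory[i:i + 12]
        tag = entry[:3].decode("ascii")
        length, start = int(entry[3:7]), int(entry[7:12])
        body = record[base + start:base + start + length].rstrip(FIELD_END)
        if tag < "010":  # control fields 001-009: a plain value
            fields.append((tag, body.decode("utf-8")))
        else:  # data fields: two indicators, then delimited subfields
            subfields = [(s[:1].decode("ascii"), s[1:].decode("utf-8"))
                         for s in body[2:].split(SUBFIELD) if s]
            fields.append((tag, (body[:2].decode("ascii"), subfields)))
    return leader.decode("ascii"), fields
```

Round-tripping a two-field record through `build_record` and `parse_record` gives back control field `001` as a string. Field `245` comes back as its two indicators plus a list of (code, value) subfields.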

Then we move on to MODS, which is a subset of MARC21. It's comparable to Dublin Core, but richer. MODS is also closer to normal XML style than MARC21 is. (That figures, as MARC21 seemed a pretty direct translation of the binary format.)

They've mapped MARC21 to Topic Maps, trying to support all of MARC21 with the same expressive power while keeping it lightweight. The XTM examples seem to indicate that they've done an object mapping, so all of the artifacts of MARC are reproduced in Topic Maps. She shows examples in Vizigator, which don't look too bad. I'd prefer a semantic mapping, but the result is still decent. She's out of time now, so we don't get to see much detail. I want to read the paper.

They included the wording "XTM is inappropriate for representing MARC" on their last slide, which led to vigorous discussion. In the end it was agreed that they didn't mean it the way they wrote it; what they meant was that representing MARC directly in XTM was inappropriate.

LuMriX

Joachim Dudeck is the next presenter. He's a medical informatics researcher, working on the searchability of documents. He's created a system called LuMriX, which can crawl, index, and search documents, and build a topic map from them. The system contains four static topic maps, built from existing terminology systems. (At this point the presenter's mobile phone rang. He answered it, saying "Hello?", much to the amusement of the audience. :) Anyway, they also have two dynamic topic maps. He shows screenshots of full-text searches into the system and of classification code results.

He also has an application with a drug list, containing drug names, substances, brand names, and connections to official drug information. This application has been well accepted at the hospital, enough so that people complain when it's not up. We see more screenshots, but too quickly for me to capture them. You can try it for yourself at LuMriX.net. Try searching for "ohr", which is "ear" in German.

There's more, but I can't follow all of it.

He's also worked on SNOMED, which seems to be quite big: 800,000 topics with 2 million associations. He shows a hierarchical diagram of it. He shows searching of this, too, and it's very fast. It's multilingual as well, and even supports Chinese.

The first question is what the storage system looks like. He says it's stored as a topic map, complete with topics, associations, occurrences, scopes, etc. He seems reluctant to divulge exactly how it works, but says more detail will be in the paper.

Visualizing Search Results

Thea Miller now presents a talk on metadata in cultural repositories. This means libraries, archives, and museums, I think. They are trying to create a common set of labelled categories, as an entry point to the resources. The idea is to use the categories to organize search results. So the user first does a search, gets lots of results, then groups them using the categories, and finally interprets them using relations coming from the topic map.

She showed the categories, which seemed similar to the Dublin Core elements, but they were not identical. She emphasized that the categories were not logical, but meant to be intuitive. They will be doing user testing to see to what extent the categories make sense to ordinary users.

As far as I can understand it they do the search, produce the result as XTM, produce an SVG visualization of this, and this is what the user sees. The actual prototype is built with C++, Perl, and XSLT. The SVG is produced with XSLT, it seems. She showed screenshots of the search result, which uses colours, size, and arrangements to indicate the differences and importance of each category. The source data is XML documents in TEI. (She said SGML, but what she's showing is XML; could be that they did a conversion to make it easier to display; not sure.)
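The prototype produced its SVG from XTM with XSLT. This Python sketch only illustrates the visual encoding. It assumes the search results have already been reduced to (document, category) pairs, and it draws one circle per category, largest first, with area proportional to the number of hits. The category names, sizes, and colour are arbitrary choices, not the project's.

```python
import math
from collections import Counter
from xml.sax.saxutils import escape

SVG_NS = "http://www.w3.org/2000/svg"


def category_svg(results, row_height=60, max_radius=25):
    """Render one labelled circle per category; circle area tracks the hit count."""
    counts = Counter(category for _, category in results)
    if not counts:
        return f'<svg xmlns="{SVG_NS}" width="400" height="0"/>'
    largest = max(counts.values())
    parts = []
    for row, (category, hits) in enumerate(counts.most_common()):
        radius = max_radius * math.sqrt(hits / largest)  # area proportional to hits
        y = row * row_height + row_height // 2
        parts.append(f'<circle cx="40" cy="{y}" r="{radius:.1f}" fill="steelblue"/>')
        parts.append(f'<text x="80" y="{y + 5}">{escape(category)} ({hits})</text>')
    height = row_height * len(counts)
    return f'<svg xmlns="{SVG_NS}" width="400" height="{height}">' + "".join(parts) + "</svg>"
```

Scaling the radius by the square root of the count keeps the circle's area, which is what the eye compares, proportional to the hits.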

Open space session

Hendrik Thomas on Merlino. This is a system which takes a topic map and uses web search engines to find occurrences for its topics automatically. It's written in Perl. You upload a topic map into the system, which analyses it and then queries the search engines. The occurrence candidates are ranked, evaluated manually, and finally added to the topic map. There is a demo at topic-maps.org.

Alex Sigel on Semblogging with Topic Maps. The idea is to create a topic map that describes a blog, to get smarter content aggregation. Alex is working on this, creating use cases, and there is a student coupling blosxom with TMAPI. Alex is also looking into using web services with it, and he's creating an open source prototype from this.

Peter-Paul Kruijsen on Using PSIs in inferencing. Basically, it's about the relationship between inference rules and instance data in a topic map. He's collecting requirements and really wants to do something about this. There was vigorous discussion of this as well, but I was too involved to capture it.

Rani Pinchuk: AIOBCT — Q/A over TM. This is an ESA project to create a question answering system. It's populated with data from two subsystems of the Columbus operations manual. This is implemented with a prototype Topic Maps engine which uses the Toma query language. It supports a wide range of natural language queries.

Lutz Maicher: TMEP. He wants to document the principles by which a topic map is created. A schema is not enough for this, as the editorial guidelines also need to be documented. Lutz has created something he calls TMEP, which is a topic map describing the process by which modifications to the topic map are motivated. He showed a huge LTM example which seemed effectively like a program directing an interaction with the user. The idea is that this can drive an interactive editing interface. He has an implementation which uses the console.

Gabriel Hopmans with two slides on ADNOM. These were mostly best-practice questions, which the audience debated.

Lars Heuer on AsTMa= version 2.0. This is being created by him and Robert Barta. It's not fully done yet, but 90% there. The slides really contained an AsTMa= tutorial. Retyping it here is just too much work, so you have to see the slides. Sorry. One cool thing is that they have a mechanism for association templates. Lars has also written a Java parser based on TMAPI, which will be open source.

In closing, Lutz Maicher welcomed us to TMRA'06 in Leipzig in October 2006. He also said that the slides would be going up on the web site.
