How Big Data will change IT

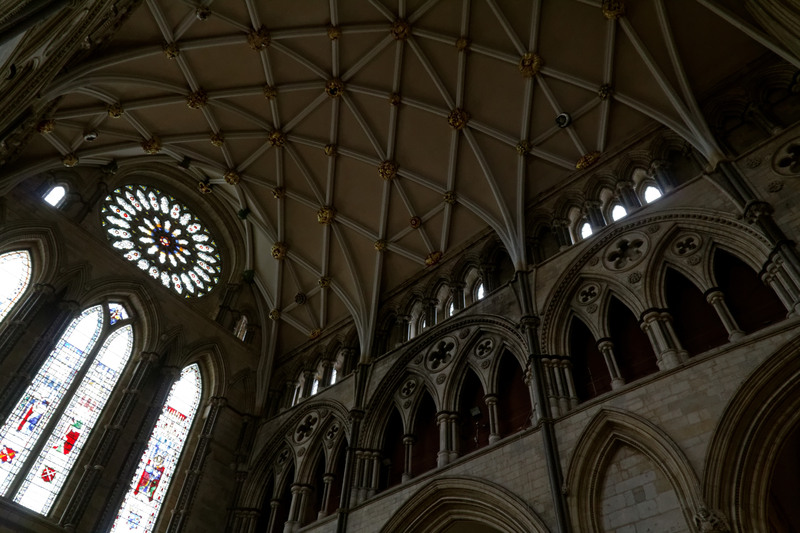

York Minster

One of the changes that Big Data is going to bring to the IT world is a new emphasis on information. For as long as I've worked in IT, people have focused on code, algorithms, user interfaces, and functionality, leaving information as an afterthought. I've even worked on projects where system architecture and information architecture were handled by separate teams, with the system architects not only in the driving seat, but pretty much ignoring the information side entirely.

This is, of course, absurd. Information is the key part of any IT system, and always has been. Decades ago, Fred Brooks wrote: "Show me your tables, and I won't usually need your flowcharts." In other words, the shape of the underlying information determines everything else, and that still hasn't changed. When you build a system, the available information decides what you can do: if you don't have the necessary information, you simply can't do it. Similarly, when decomposing a big system into modules, it's the data dependencies that determine which modules can be split out and which cannot.
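To make the point about data dependencies concrete, here is a minimal Python sketch. It assumes you already know which tables each module reads or writes (the module and table names are hypothetical), treats any shared table as a dependency, and uses union-find to group modules that are connected through shared tables, directly or transitively. Each resulting group is a candidate unit for splitting out; no module can leave its group without taking the shared data with it.

```python
from collections import defaultdict


def split_candidates(table_usage):
    """Group modules that share tables, directly or through other modules.

    table_usage maps a module name to the set of tables it reads or writes.
    Each returned group can only be split out as a single unit.
    """
    parent = {module: module for module in table_usage}

    def find(module):
        # Follow parent links to the group's representative (with path halving)
        while parent[module] != module:
            parent[module] = parent[parent[module]]
            module = parent[module]
        return module

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Join every module with the first module seen using the same table
    first_user = {}
    for module, tables in table_usage.items():
        for table in tables:
            if table in first_user:
                union(first_user[table], module)
            else:
                first_user[table] = module

    groups = defaultdict(list)
    for module in table_usage:
        groups[find(module)].append(module)
    return sorted(sorted(group) for group in groups.values())


# Hypothetical system: which tables each module touches
usage = {
    "billing": {"invoices", "customers"},
    "crm": {"customers", "contacts"},
    "reporting": {"invoices"},
    "search": {"search_index"},
}
print(split_candidates(usage))  # [['billing', 'crm', 'reporting'], ['search']]
```

Here billing, crm and reporting end up in one group because they are chained together through the customers and invoices tables, while search can be split out on its own. A more careful analysis would probably distinguish reads from writes, since read-only sharing can sometimes be handled by replicating the data.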

With the rise of Big Data there is reason to believe that this attitude may change, and that the emphasis in IT may turn to the information side, where it should have been all along.

Research becomes relevant again

Over a decade ago, Rob Pike lamented that while lots of great things were coming out of academic IT research, the people building solutions for real users were paying it no attention at all. That has now well and truly changed. The whole Big Data/Machine Learning trend is about applying techniques from academic research in the real world. There is much, much more where these techniques came from, and people who can use them have a huge advantage over those who cannot.

Even something as seemingly simple as the Levenshtein string distance measure has a huge research literature around it, covering faster algorithms, finite state automata for fast Levenshtein searches in search engines, q-gram indexes for Levenshtein lookup, and so on. Similar literatures exist on a huge number of topics, all of them full of potentially enormously useful techniques.
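For reference, the textbook algorithm that this literature builds on fits in a few lines. The following is a minimal Python sketch of the standard Wagner-Fischer dynamic programming approach, which takes O(mn) time for strings of length m and n. The faster algorithms, automata and q-gram indexes mentioned above largely exist to avoid paying that cost against every string in a large collection.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: the minimum number of single-character insertions,
    deletions and substitutions needed to turn a into b.

    Classic Wagner-Fischer dynamic programming, keeping only two rows of the
    (len(a) + 1) x (len(b) + 1) table in memory.
    """
    if len(a) < len(b):
        a, b = b, a  # the distance is symmetric, so keep the row short
    previous = list(range(len(b) + 1))  # distances from "" to prefixes of b
    for i, char_a in enumerate(a, start=1):
        current = [i]  # distance from a[:i] to ""
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + (char_a != char_b),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


print(levenshtein("kitten", "sitting"))  # 3
print(levenshtein("flaw", "lawn"))       # 2
```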

In fact, knowing the precise formal properties of your computational problem is a key that can unlock (or lock) the door to masses of useful research. I'll give an example. Let's say you're trying to find objects that are similar to an input object. The input might be a photo, a database record, a document, or anything else. It turns out that if your measure of (dis)similarity is a true metric (that is, it is symmetric, zero only between identical objects, and obeys the triangle inequality; Levenshtein distance is one such measure), then the set of objects forms a metric space, and there's a large body of algorithms that can be used to search it quickly.
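One classic example of what the triangle inequality buys you is the BK-tree (Burkhard-Keller tree), which works with any metric. Below is a minimal Python sketch using Levenshtein distance and a handful of made-up words. Every item in the subtree under a child edge labelled e is at distance e from the parent node. So when searching for items within radius r of a query that is at distance d from a node, the triangle inequality guarantees that only subtrees with e between d - r and d + r can contain matches, and all others can be skipped without computing a single distance.

```python
def levenshtein(a, b):
    # Compact two-row edit distance; any true metric would work here
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(previous[j] + 1, current[j - 1] + 1,
                               previous[j - 1] + (char_a != char_b)))
        previous = current
    return previous[-1]


class BKTree:
    """Burkhard-Keller tree: a search index for any metric distance function."""

    def __init__(self, distance):
        self.distance = distance
        self.root = None  # each node is (item, {edge_distance: child_node})

    def add(self, item):
        if self.root is None:
            self.root = (item, {})
            return
        node = self.root
        while True:
            d = self.distance(item, node[0])
            if d == 0:
                return  # identical item already indexed
            children = node[1]
            if d not in children:
                children[d] = (item, {})
                return
            node = children[d]  # descend along the edge labelled d

    def search(self, query, radius):
        """Return sorted (distance, item) pairs for all items within radius."""
        results = []
        stack = [self.root] if self.root is not None else []
        while stack:
            item, children = stack.pop()
            d = self.distance(query, item)
            if d <= radius:
                results.append((d, item))
            # Triangle inequality: only edges in [d - radius, d + radius] can match
            for edge, child in children.items():
                if d - radius <= edge <= d + radius:
                    stack.append(child)
        return sorted(results)


tree = BKTree(levenshtein)
for word in ["book", "books", "cake", "boo", "boon", "cook", "cape", "cart"]:
    tree.add(word)
print(tree.search("bok", 1))  # [(1, 'boo'), (1, 'book')]
```

For small radii the pruning step typically skips most subtrees, which is what makes metric-space indexes attractive for large collections. The class itself knows nothing about strings, so it should work unchanged with any other distance function that satisfies the metric axioms.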

It's obvious that anyone who can make use of all this has a huge advantage, and Google is an excellent example of a company that's doing exactly that.

19th century high technology

The many uses of machine learning

Machine learning techniques are beginning to creep into areas other than data science, too. One example is using machine learning to learn the optimal configuration for a search engine. Another is using machine learning to identify outliers in data sets, to find erroneous data values. A third might be training a model to distinguish log messages that indicate serious problems from ordinary messages that require no action.
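As a very simple baseline for the outlier case, here is a minimal Python sketch using the modified z-score, which is built on the median and the median absolute deviation (MAD) instead of the mean and standard deviation, so the outliers themselves don't distort it much. Note that this is a fixed statistical rule, not a learned model; a machine learning approach (an isolation forest, say) would fill the same role but learn what "normal" looks like from the data. The 3.5 cut-off is a commonly used default, and the age values are invented for illustration.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds threshold."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # More than half the values equal the median, so there is no spread to
        # scale by; fall back to flagging everything that differs from it.
        return [i for i, v in enumerate(values) if v != med]
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores
    # when the data is roughly normally distributed.
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical ages from a customer table; 330 is presumably a typo for 33
ages = [34, 29, 41, 38, 27, 330, 36, 31]
print(flag_outliers(ages))  # [5]
```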

A related example is using record linkage techniques, which allow data sets to be linked even without common identifiers. This makes it possible to take an initial data set and connect it to other related data sets to enrich the data you have available to work with. Since what data you have available determines what you can do (as pointed out above), this can make an enormous difference.
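A minimal Python sketch of the idea follows, assuming a made-up schema where each record has a local `id`, a `name`, a `city` and a birth year `born`. The ids are meaningless across data sets, so matching relies on the other fields: records are only compared within the same birth year (a technique known as blocking), fields are compared with `difflib.SequenceMatcher` after light normalization, and the best candidate is accepted if its average similarity reaches a hypothetical threshold of 0.9.

```python
from difflib import SequenceMatcher


def normalize(text):
    # Lower-case and collapse runs of whitespace so trivial differences vanish
    return " ".join(text.lower().split())


def similarity(a, b):
    # Ratio in [0, 1] of matching characters between the normalized strings
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def link_records(left, right, threshold=0.9):
    """Pair each record in left with its best match in right, if any.

    Only records with the same birth year are compared ("blocking"), and a
    pair is accepted when the average name/city similarity reaches threshold.
    """
    by_year = {}
    for record in right:
        by_year.setdefault(record["born"], []).append(record)

    links = []
    for a in left:
        best, best_score = None, threshold
        for b in by_year.get(a["born"], []):
            score = (similarity(a["name"], b["name"])
                     + similarity(a["city"], b["city"])) / 2
            if score >= best_score:
                best, best_score = b, score
        if best is not None:
            links.append((a["id"], best["id"], round(best_score, 2)))
    return links


# Two hypothetical data sets with unrelated id schemes
crm = [
    {"id": "a1", "name": "Kari Nordmann", "city": "Oslo", "born": 1980},
    {"id": "a2", "name": "Ola Hansen", "city": "Bergen", "born": 1975},
]
billing = [
    {"id": "b7", "name": "kari  nordman", "city": "OSLO", "born": 1980},
    {"id": "b8", "name": "Ola Hanssen", "city": "Bergen", "born": 1957},
    {"id": "b9", "name": "Per Hansen", "city": "Bergen", "born": 1975},
]
print(link_records(crm, billing))  # [('a1', 'b7', 0.98)]
```

Ola Hansen is not linked: the only candidate born in the same year, Per Hansen, scores 0.85, and the near-identical Ola Hanssen is never compared because of the different birth year. That illustrates both the speed benefit of blocking and its risk when the blocking field itself may contain errors. Dedicated record linkage tools generally use more careful comparators and probabilistic weighting of the evidence.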

I think these examples are just the beginning, and that we will see much more of this as people discover how these techniques can be used to solve all kinds of problems. And, again, having access to this huge toolchest is going to be a big advantage.

Celtic cross, Whitby, UK

The challenge for practitioners

For developers and architects, the challenge here is that all of these changes require us to learn not just new skills, but new skills of a kind that many find hard to acquire. Many developers and architects find working with information intimidating, for reasons that are not entirely clear to me, but may have to do with the formality of modeling. Similarly, the research is mostly described in terms of formal math, a language foreign to most developers and architects.

At the same time, many universities have shifted their curricula away from formal computer science, which emphasized exactly these skills, toward simpler software engineering courses. The trouble is that software engineering is not yet a fully developed discipline, and its useful parts are not too hard to acquire on the job, whereas learning graph theory and linear algebra by osmosis is beyond most of us. And now, suddenly, many people are required to do just that.

So the IT profession is changing, and it's changing in ways that the profession is not well prepared for. Presumably this is good news for the few people who have or can acquire the right sorts of skills, but, in the short term, probably not for anyone else.


Comments

Dave Pawson - 2013-10-04 03:03:27

Thought provoking Lars. Timely after I was pointed at http://graphdatabases.com/ last night. Thanks.